MUSIC + MOTION

Expressing identity through music for people with ALS (pALS)

People living with ALS (pALS) have limited mobility due to the degeneration of their motor neurons. This study proposes a design that allows eye-gaze users to express themselves through music in an intuitive and emotionally driven manner. The concept is based on the insights of a music-lover who was diagnosed with ALS. He found immense joy in conducting a flautist through his limited head and eye movements, which planted the idea to recreate the experience in a digital eye-gaze application.

This paper outlines the methodology for designing the app and creating a rudimentary prototype. It also evaluates the design with non-representative users, resulting in feedback that helped refine the design. Future directions include exploring AI for note prediction and using this study as a skeleton for a comprehensive, functioning prototype for further evaluation.

Use Case

Accessibility and Assistive Technology Module

Time Constraint

10 Weeks

Role

Entire project

Tags

assistive technology, AAC devices, ALS, MND, music, disability interaction (DIX), conceptual, research

THE BRIEF

The aim of this activity was to co-create solutions with people living with ALS, and design an application or device that will address our ALS mentor’s needs and pain points.


Research + Ideation

Michael Wendell, or Mike, is a vibrant, creative, and animated person who used to love DJ-ing at social gatherings, mixing afrobeats with reggae, while exuding an energy that lights up the room. In 2019, Mike was diagnosed with amyotrophic lateral sclerosis (ALS), a rare neurological disease marked by progressive degeneration of the motor neurons [5]. In the UK, ALS is referred to as Motor Neuron Disease (MND), and the two terms are used interchangeably throughout this project. People living with ALS (pALS) have completely intact and active cognitive abilities [5]. However, thoughts and conversation become harder to express as the bulbar muscles atrophy or weaken, causing speech and swallowing difficulties [6]. With the emergence of augmentative and alternative communication (AAC) devices, pALS can communicate using various input methods, including eye-gaze, but AAC interfaces are clunky, hard to navigate, and take much longer to articulate than natural speech.

Although it is vital that we continue improving AAC software, we should focus not only on speech as a form of communication but also on the universal language of music. In fact, the prescriptive and medicalised approach to designing for pALS often discounts the importance of creative pursuits and the element of play [12]. When an individual loses the ability to walk, speak, and swallow, it is easy to forget that living encompasses more than mere survival.

Every poem that Mike writes is signed off as “Mike, not dead”. He is reminding us that, although his body on the outside may be inactive, his mind and soul are very much alive.

As outlined in the Disability Interaction (DIX) Manifesto, co-creating solutions is a vital principle of successful innovation in the accessibility and assistive technology space. The manifesto highlights the importance of “working with disabled people and … organisations to define the problem, create the solution, and form a community of practice that evolves the solution” [4]. Therefore, ideation began with a co-design session with the inspiration for this project, Mike, and his wife, Mary.

To begin ideation, I asked about Mike’s musical pursuits and what it was like before he was diagnosed with MND, and throughout the disease progression. As mentioned in the introduction, Mike used to DJ and loved curating the space for his friends and family. When he was diagnosed with MND, he looked into EyeHarp, a software that uses coloured squares in a circular pattern as keys, allowing the user to rest their eyes in the middle, and navigate across notes easily. Each square correlates to a specific note, which proved to be challenging for Mike who wanted to simply feel the music without having to think about its technicality or determine which notes go together to create a harmonious melody. The steep learning curve became a barrier to enjoying EyeHarp as a sustainable hobby. This experience was translated onto a user journey map (A.1) which was then used to identify gaps to create a preliminary research framework (A.2).

In addition to discussing EyeHarp’s pain points, I also wanted to identify the desirable attributes of a music application — thus, we discussed other times that Mike pursued creative hobbies. Mary pointed to the time when Mike used his limited head movements to direct a flautist. The flautist followed his motion, going higher up in pitch as he moved his head up, and lower in pitch as he brought it down (figure 1). “I rarely see his eyes light up the way it did that day,” said Mary. She emphasised that “you start to lose control of your own body as you progress through the stages of ALS, and you have to relinquish a lot of autonomy. Although virtual reality and the metaverse may be the hot topics, the greatest impact will come from creating something that will give him back that sense of control”.

User Journey

User Requirements

The ideation session helped identify Mike’s needs and wants in a music production application. After analysing the transcript of the recorded ideation session, the following user requirements were developed:

Provide a sense of control and autonomy for the user

Should be easy to use and intuitive: everything is challenging with ALS, so the user should not have to be a professional musician in order to create music

Consider ergonomic challenges that come with the eye gaze input method

Design + Implementation

With the core of the design built on autonomy and creation, a non-interactive interface prototype would not have provided the accurate insight required to evaluate the concept. Therefore, I decided to create a rudimentary working prototype in Python, leveraging existing Python libraries. The implementation plan is outlined as follows:

Get head movement/eye movement input from camera using OpenCV

Map coordinates onto frequencies

Output frequency as sound using simpleaudio

Visualise the frequencies using Stream Analyzer and PyAudio

Create a graphical user interface (GUI) prototype in Figma for final UX evaluation

3.1 Sound input/output (I/O)

For the input methodology, I followed a tutorial [7] that walks through how to use OpenCV to locate the pupil in webcam frames as input data. The tutorial pairs OpenCV with the Dlib C++ library [17], which contains machine learning algorithms, including several implementations of image processing techniques. In this instance, we are using dlib_face_recognition_resnet_model_v1, which uses Deep Neural Networks (DNN) for object recognition [17]. The pipeline detects the face and the coordinates of the pupil centre. These coordinates are then used as inputs to the sound output functions, which calculate the frequency from the x-coordinate. This value is converted into a “note” by generating a 440 Hz sine wave that is then converted to 16-bit data (appendix A3). The converted note is then passed as a parameter to the simpleaudio play object, which outputs the final sound.
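The step from gaze position to sound described above can be sketched as follows. This is a minimal, standard-library illustration rather than the project’s code: the function names, the linear mapping, and the 220 to 880 Hz range are all assumptions made for the example.

```python
import math
from array import array

SAMPLE_RATE = 44100  # samples per second (CD-quality audio)


def x_to_frequency(x, frame_width, f_min=220.0, f_max=880.0):
    """Map a horizontal pupil position to a frequency in Hz.

    Assumption: a linear map where the left edge of the frame gives
    f_min and the right edge gives f_max. The range is illustrative.
    """
    ratio = min(max(x / frame_width, 0.0), 1.0)  # clamp to the frame
    return f_min + ratio * (f_max - f_min)


def sine_note_16bit(frequency, seconds=0.1, sample_rate=SAMPLE_RATE):
    """Return one sine-wave note as signed 16-bit samples."""
    n_samples = round(seconds * sample_rate)
    peak = 2 ** 15 - 1  # largest signed 16-bit value
    return array(
        "h",
        (
            round(peak * math.sin(2 * math.pi * frequency * n / sample_rate))
            for n in range(n_samples)
        ),
    )


# A pupil in the middle of a 640-pixel-wide frame (hypothetical values).
note = sine_note_16bit(x_to_frequency(x=320, frame_width=640))
```

In a setup like the one described, the raw bytes (`note.tobytes()`) could then be handed to simpleaudio’s `play_buffer(audio_data, num_channels, bytes_per_sample, sample_rate)` with one channel and two bytes per sample.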

Music visualisation

The next step was to visualise the audio so that users can see it live as they produce their composition. This visualisation should feel intuitive and inspiring, so that users feel they can truly express themselves and their emotions. I employed an open source package called Realtime_PyAudio_FFT, where FFT stands for Fast Fourier Transform [11]. Fourier analysis relates a signal’s representation in the time domain to its representation in the frequency domain, which is seen most clearly in periodic systems such as a plucked string [8], and it is central to signal processing. The package performs real-time audio analysis and visualisation of FFT features from a live audio stream. I chose it mainly for its impact on the emotionality of the UX: the visualisation adds a fluidity of movement that allows users to see how their eye-gaze or head movements affect the audio. This returns to the core of the design, which is to encourage emotional self-expression through music.
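To make the time-to-frequency idea concrete, the sketch below finds the strongest frequency in a short tone using a naive discrete Fourier transform, the same quantity an FFT computes more efficiently. It is not how Realtime_PyAudio_FFT is implemented; the function name, the 8000 Hz sample rate, and the 400-sample window are assumptions chosen so the example runs quickly with only the standard library.

```python
import cmath
import math


def dominant_frequency(samples, sample_rate):
    """Return the frequency (Hz) of the strongest bin in a naive DFT."""
    n = len(samples)
    best_bin, best_magnitude = 0, 0.0
    for k in range(1, n // 2):  # skip the DC bin, stay below Nyquist
        coefficient = sum(
            s * cmath.exp(-2j * math.pi * k * i / n)
            for i, s in enumerate(samples)
        )
        if abs(coefficient) > best_magnitude:
            best_bin, best_magnitude = k, abs(coefficient)
    return best_bin * sample_rate / n  # convert bin index to Hz


SAMPLE_RATE = 8000  # a low rate keeps the O(n^2) loop fast
tone = [math.sin(2 * math.pi * 440 * i / SAMPLE_RATE) for i in range(400)]
peak_hz = dominant_frequency(tone, SAMPLE_RATE)
```

With 400 samples at 8000 Hz, each bin is 20 Hz wide, so a 440 Hz tone falls exactly on one bin. A live visualiser repeats this kind of analysis on successive windows of the audio stream and draws the resulting magnitudes.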

Interface prototype

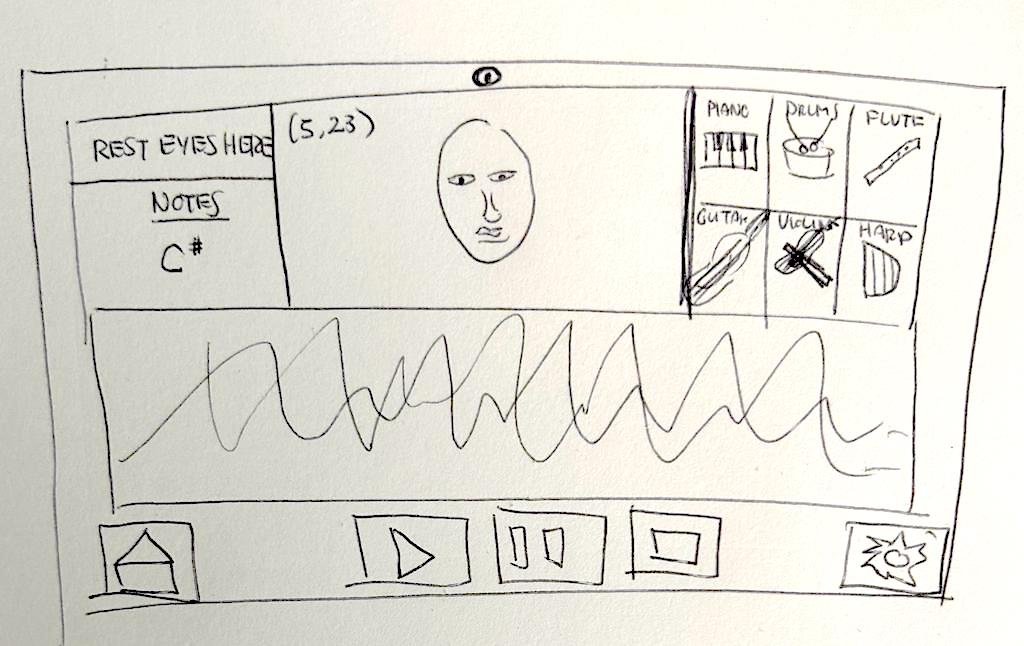

The final step of this rudimentary prototype was to create a graphical user interface (GUI) mockup using Figma. Although I was not able to embed the working prototype into an interactive GUI, I still wanted to visualise the front-end, which will be important for evaluating the final UX. I began by sketching out a rough drawing of the interface (figure 5). I then used Figma to display screenshots of the implemented apps from 3.1 and 3.2 (figure 5). I ensured that the interface included the crucial features: the user’s face, so they can orient themselves within the xy coordinate space that the frequencies are based on; an instrument selection pad that can be navigated to easily; and the audio visualisation display. Furthermore, ease of navigating the controls to play, record, and stop the production was a priority, as these would be the basis of the application. An auto-save functionality should be considered if this were to be built out.

Code: GitHub Repo HERE

User Testing

User testing is a crucial part of human-centred design and the DIX design framework [4]. However, due to the time and resource constraints of this project, I was unable to test a full-fledged prototype with Mike or other pALS. Instead, I approximated the evaluation with non-representative users by testing the eye-gaze prototype, the audio analyser visualisation, and the GUI mockup separately.

Testing eye-gaze programme and GUI with non-representative users

Two participants (males, ages 25-28) were recruited from University College London. Participants provided informed consent and permission to use their images in this report.

After showing the participants the working eye-gaze input application, I asked them several questions about usability, control/autonomy, and emotionality. I analysed the feedback to determine whether the application met the user requirements gathered at the start of this project. P1 said, “seeing the sound waves visualised as I moved my head, and hearing the pitch change, definitely made me feel emotionally connected to what I was creating”. However, P1 mentioned that the lag between starting a note and the note completing took away from the enjoyment and expressiveness of using the app. When asked whether the input format allowed for a sense of control and autonomy, P2 said, “it does feel like I have control but there needs to [be] tools to adjust where you are in the timeline of the production, a looping functionality, and more easily navigable recording layout for greater control of the piece”. Similarly, P1 mentioned that the interface didn’t provide enough support for the music production aspect of the app. This led to two major areas of improvement used to refine the designs:

Increase sample rate for a more enjoyable experience

Add a timeline and other tools to the GUI to improve the production experience

Image: Code snippet showing the change from the linspace function in the audio calculations.

Image: High-fidelity redesign of the interface, adjusted based on user feedback.

Refine design after first round of testing

To ensure that the programme would run more smoothly and allow the user to express themselves more fluidly, I adjusted how the sample times are generated for the simpleaudio note calculations (figure 8). By replacing the linspace function with the arange function, I could pass a non-integer duration and reduce it to 0.1 seconds, so that the array t runs from 0 to 0.1 seconds in increments of 1/44100 (figure 8). Each note is therefore much shorter, which lets the sound follow the user’s movement more closely.
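The arithmetic behind this change can be checked with a small standard-library stand-in for the array t. It is not the project’s NumPy code; the function name is hypothetical and the values are taken from the description above (a 0.1-second note sampled every 1/44100 of a second).

```python
def sample_times(seconds, sample_rate=44100):
    """Return time stamps from 0 up to (not including) `seconds`,
    spaced 1/sample_rate apart."""
    # The number of samples must be a whole number, even when the
    # duration itself is fractional.
    n_samples = round(seconds * sample_rate)
    return [n / sample_rate for n in range(n_samples)]


t = sample_times(0.1)
```

A 0.1-second note at 44,100 samples per second therefore contains 4,410 samples, so far less audio has to be generated and played before the next gaze reading can change the pitch.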

I then updated the GUI prototype according to the feedback (figure 9). The instrument selection pad was removed, as the minimum viable product does not need to offer instrument options. It was replaced with a loop function for looping recorded segments. I also moved the home and settings buttons to the top left, as the note indicator was not necessary. This made room for more comprehensive, easily accessible controls at the bottom. Lastly, two timelines were added, along with a yellow marker to indicate where the user is within the scope of their creation. This yellow marker can be moved using the fast forward/backward buttons.

Strengths and limitations

This design is a collaboration between university students, an MNDA expert, and Mike, an incredible person who faces the challenges of living with ALS/MND daily with the support of his wife, Mary. The diverse backgrounds of all members involved and the first-hand experience that Mike shared were the strongest aspects of this project. This exemplifies the importance of co-created solutions [4]. Furthermore, this design could be used and enjoyed by people of all abilities, including those who want to create music using a method different from the traditional format.

However, this project also has its limitations. A major limitation was the inability to test with pALS. I was in the process of building out a Flask application [18] to enable remote testing with Mike and Mary through an API call that would run the application on a public domain. This would ease concerns about having Mike download Python and all its dependencies in order to test the product; easy setup is important when doing remote evaluation with users with disabilities [9]. Although I was able to get the eye-gaze portion running on a local server, I was unable to run the FFT analyser in parallel or deploy to a public domain. Finding time for in-person testing also proved challenging, which is why I tested with non-representative users. Therefore, subsequent iterations of the application should involve Mike as well as other pALS for a richer final product. Continued co-creation with the target users is a key aspect of DIX design, and I recognise that it is a common mistake to extract knowledge, time, and information from the target users as “user research” and then carry the project forward separately. Another limitation was the inability to build out a robust functional prototype. Due to the limited time of this project and the scope of my technical skills, I was unable to build a working prototype with the integrated GUI. Both the audio-visual experience and the usability of the composition and production features are important to the final UX. To address both limitations, future work should build out and evaluate a comprehensive and interactive prototype with a larger team of pALS, designers, and engineers.

Future Work: Artificial Intelligence (AI) for note prediction

Additionally, future work should explore AI for note prediction. AI has already reached the music world, with the rise of technologies such as Jukebox [3] and Google’s MusicLM, an advanced text-to-music generator [1,15]. However, most of these AI models generate melodies automatically from text inputs. As with the text-to-image products DALL-E and Midjourney, this can be fun and exciting, but it may not provide the same longitudinal relationship that a creator would have with their work; there is an input and an immediate output. Instead, I would like to investigate how AI could be used to predict the next note, given a specific genre. We could leverage the existing text-to-music framework to provide inputs such as “in the style of Beyoncé” or “early 2000s RnB”, in conjunction with the x-coordinate of the user’s motion, to output a chord or note that would fit the intended melody, akin to autotune. The key to this approach is that the user remains in control of the output. When the user moves to the right, the pitch increases, and when the user moves to the left, it decreases. AI should assist the user in the creative process, not create for them [14]. With this addition, the hope is that pALS, and all users, can express themselves fully through the language of music.
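As a point of reference for the “akin to autotune” idea, the sketch below shows the simplest non-AI version: the user’s motion still chooses the pitch, and the system only nudges it to the nearest note of a chosen scale. This is a rule-based stand-in, not a prediction model; the function name and the choice of A minor pentatonic are assumptions for illustration. A genre-aware model would replace the fixed scale with notes it judges likely to come next.

```python
import math

A4_HZ = 440.0
A4_MIDI = 69
# Pitch classes of A minor pentatonic (A, C, D, E, G); an illustrative choice.
A_MINOR_PENTATONIC = {9, 0, 2, 4, 7}


def snap_to_scale(frequency, pitch_classes=A_MINOR_PENTATONIC):
    """Return the frequency of the in-scale note closest to `frequency`."""
    # Convert Hz to a fractional MIDI note number (12 steps per octave).
    midi = A4_MIDI + 12 * math.log2(frequency / A4_HZ)
    centre = round(midi)
    # Look one octave around the target so every pitch class is covered.
    candidates = [
        m for m in range(centre - 6, centre + 7) if m % 12 in pitch_classes
    ]
    nearest = min(candidates, key=lambda m: abs(m - midi))
    return A4_HZ * 2 ** ((nearest - A4_MIDI) / 12)


snapped = snap_to_scale(450.0)  # slightly sharp of A4
```

Because the mapping from position to pitch stays monotonic, moving right still raises the pitch and moving left still lowers it, which preserves the sense of control the design is built around.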